A while back I posted an article about two options for summarizing and extracting data from around points. The two solutions that I posited were zonal statistics and neighborhood statistics. Each has its advantages and disadvantages. The standard zonal statistics tool in ArcGIS doesn't deal with overlapping polygons, which is a common problem. The neighborhood approach tends to be slow, but it deals with overlaps effectively and is necessary for people making wall-to-wall predictive maps (such as species distribution models).

There is, however, a third option. The zonal statistics tool can be modified to accommodate overlaps. That is exactly what I have done. Below is the model that I created in Model Builder to achieve this.

I plan to have this out soon as a tool available to download. In the meantime there are a couple of key features of this model that I'd like to point out.

1) Iteration by Field Values - This step iterates through a categorical raster, let's say land cover classes, producing an output that represents the proportional cover for each type.

2) Intersect/Erase - Buffers are generated and the Intersect and Erase tools are used to identify the "normal non-overlapping" portion of each buffer as well as the overlapping portion. Unfortunately the Erase tool requires a full ArcGIS Advanced license, so I'll need to put some more thought into how to make this available to folks with lower license levels.

3) Zonal statistics - The tool performs a couple of zonal statistics operations. It sums the number of cells in each class and then sums the total number of cells to get the proportional cover.

4) Once the proportional cover has been calculated for overlapping buffer areas and non-overlapping buffer areas there is a need to put this information together. The field calculator is used with the following formula: max( !Prpn_Overlap_Raster!, !Prpn!). This effectively deals with the fact that there are Null values from one output when there are data values from the other and vice versa. This seems to be an effective way of getting around trying to make field mapping work.
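The max() formula in step 4 depends on how the Python parser treats Null. In the Python 2 parser used by ArcMap, a Null arrives as None and sorts below any number, so max() returns the non-Null value; in Python 3 the same comparison raises a TypeError. Below is a minimal null-safe sketch of the same idea. The function name combine_proportions is hypothetical and is not part of the model.

```python
def combine_proportions(prpn_overlap, prpn):
    """Return the larger of two proportions, ignoring Null (None) inputs.

    Hypothetical helper mirroring max(!Prpn_Overlap_Raster!, !Prpn!):
    if only one input holds a value, that value is returned;
    if both are Null, the result stays Null.
    """
    values = [v for v in (prpn_overlap, prpn) if v is not None]
    return max(values) if values else None


# One output is Null, the other holds the data value.
print(combine_proportions(None, 0.4))
print(combine_proportions(0.25, None))
```

In the Field Calculator a function like this would go in the code block, with the two fields passed in as arguments.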

Look soon for a new tool to automate this process.

With this blog I intend to share GIS, remote sensing, and spatial analysis tips, experiences, and techniques with others. Most of my work is in the field of Landscape Ecology, so there is a focus on ecological applications. Postings include tips and suggestions for data processing and day-to-day GIS tasks, links to my GIS tools and approaches, and links to scientific papers that I've been involved in.

Thursday, December 15, 2016

Wednesday, December 14, 2016

Daniel's XL Toolbox for making high quality figures for journal publications

Once in a while it is nice to be able to export figures out of Microsoft Excel for publication in a scientific journal. The problem is that Excel doesn't export at a high enough resolution (DPI) to meet most journal requirements. Yes, R is a solution that gives you total control over your own graphics. Another handy alternative is Daniel's XL Toolbox - https://www.xltoolbox.net/ . I've found it to be very user-friendly, and now it offers a bunch of improvements and even has its own YouTube video - https://www.youtube.com/watch?v=7QYTj7kpNiA

Clever trick for one to many spatial joins in ArcMap

I came across this post recently, and realized that I, too, was one of the many who have been doing GIS for years without realizing that this option existed. What a clever trick for working with one to many relationships.

https://esriaustraliatechblog.wordpress.com/2015/06/22/spatial-joins-hidden-trick-or-how-to-transfer-attribute-values-in-a-one-to-many-relationship/

Wednesday, December 7, 2016

Great Basin LCC Webinar is now available online

Marjorie did a wonderful job of presenting our ongoing work this past Monday on pygmy rabbits. If you didn't get a chance to watch the presentation live you can catch a recorded version here:

https://www.youtube.com/watch?v=loAHd86Fvsk&edit=vd

Wednesday, November 30, 2016

Upcoming Great Basin LCC Webinar on Pygmy Rabbit

Marjorie Matocq is presenting next Monday for the Great Basin Landscape Conservation Cooperative webinar series. Sign up at http://greatbasinlcc.org/webinar-series . If you can't make it there will be a recorded seminar available on YouTube in a couple of weeks.

Thursday, November 17, 2016

Sarah Barga wins 2nd place in GSA poster contest

It is always great news when students win awards! Recently Sarah Barga, an EECB Ph.D. student advised by Beth Leger, won 2nd place in UNR's Graduate Student Association student poster contest for her poster entitled "Creating maps of potential habitat for Great Basin forbs using herbarium data". I'm proud to say that I helped her get started with the Maxent modeling, but really, Sarah has done a tremendous amount of interesting work and is currently working on finalizing two manuscripts at the same time. The maps on the right are straight off of her poster. The different colors correspond with the amount of overlap for different top models. You can view her poster by clicking HERE and her research by visiting her website HERE .

Wednesday, November 2, 2016

website overhaul on its way

Our lab is overhauling its website. The old and new websites will have the same URL - https://naes.unr.edu/weisberg/ . We're expecting the new site to be out within the next couple of weeks. Expect an update when it is out.

Tuesday, October 25, 2016

New tool - Line Intercept Mapping for ArcGIS

I've got a new tool for displaying the results from line intercept mapping in GIS. You can download that tool HERE .

Line intercept is one of the most common sampling methods in ecology. Despite its widespread popularity, there is a lack of tools for automatically importing and visualizing line intercept data in a GIS. This tool alleviates this problem, making line intercept mapping easier. The tool can be run using any of the three license levels of ArcGIS and does not require any extensions. The tool is capable of generating overlapping line segments. The tool does not require linear referencing because transect lines are assumed straight. The tool requires that your data be in two tables: a transect coordinate table and a start-stop table. The transect coordinate table should have four fields: a TransectID, easting (or longitude for the GCS version of the tool), northing (or latitude for the GCS version of the tool), and supposed line length (length of the line as measured in the field). The start-stop table requires four fields: a TransectID, a start distance, a stop distance, and at least one field with a descriptive attribute that you are trying to map.
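Because the transects are assumed straight, placing a start-stop record on the map comes down to linear interpolation between the two ends of the line. The sketch below illustrates that idea only; it is not the tool's actual code. It assumes each transect has a known start and end coordinate, and the function and parameter names are hypothetical.

```python
def segment_endpoints(start_xy, end_xy, field_length, start_dist, stop_dist):
    """Locate one start-stop record along a straight transect.

    start_xy, end_xy : (easting, northing) of the two transect ends (assumed known)
    field_length     : line length as measured in the field
    start_dist, stop_dist : distances along the line from the start-stop table
    Distances are converted to fractions of the field-measured length, so the
    segment is scaled to fit the mapped line.
    """
    (x0, y0), (x1, y1) = start_xy, end_xy

    def point_at(distance):
        fraction = distance / field_length
        return (x0 + fraction * (x1 - x0), y0 + fraction * (y1 - y0))

    return point_at(start_dist), point_at(stop_dist)


# A 50 m transect with a shrub intercepted between 10 m and 25 m.
segment = segment_endpoints((0.0, 0.0), (30.0, 40.0), 50.0, 10.0, 25.0)
print(segment)
```

Two records on the same transect with overlapping start and stop distances simply produce overlapping segments, which fits the overlap behavior described above.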

New paper - Bending the carbon curve: fire management for carbon resilience under climate change

Our lab has a new paper "Bending the carbon curve: fire management for carbon resilience under climate change", which is about forest management and resilience. It suggests that active management, such as fuel treatments and selecting for more drought-tolerant species, has the potential to capture and retain carbon long-term. The article was published in Landscape Ecology with Louise Loudermilk as the first author and Rob Scheller, Peter Weisberg, and Alec Kretchun as co-authors. You can check out their article HERE .

Comments on Prepare Rasters for Maxent versus Find ArcGIS Rasters and Project to Template in MGET

On my page for the Prepare Rasters for Maxent Tool for ArcGIS I made a comment about its application versus another tool called Find ArcGIS Rasters and Project to Template in the Marine Geospatial Ecology Toolbox. To access that page click HERE . Here is the text of that comment:

The Marine Geospatial Ecology Tools for ArcGIS (http://mgel.env.duke.edu/mget) is an excellent toolbox that includes much of the functionality of the Prepare Rasters for Maxent toolbox. I highly recommend checking it out. In particular, check out the Find ArcGIS Rasters and Project to Template tool which will batch project and clip all rasters to a template. One primary difference between that tool and Prepare Rasters for Maxent is that Prepare Rasters for Maxent has built-in steps to resample and fill in missing data. If you know that you have gaps in your data then Prepare Rasters for Maxent may be your best bet. If you don't think that you have gaps or they are minor then consider Find ArcGIS Rasters and Project to Template as a speedier alternative. You can create a template raster by multiplying all of your rasters together in the Raster Calculator. By default this will take the intersection of all of the rasters resulting in a template that is smaller than any one of the inputs.
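The multiplication trick works because NoData in any input carries through to the output, so only cells with data in every raster survive. Here is a small NumPy sketch of that behavior. It assumes the rasters are already aligned arrays of the same shape and that NaN stands in for NoData; the function name is hypothetical.

```python
import numpy as np


def template_from_rasters(rasters):
    """Build a template (1.0 where every input has data, NaN elsewhere).

    Assumes aligned arrays of equal shape with NaN used as NoData.
    Multiplying the inputs propagates NaN, which mimics how NoData
    carries through a Raster Calculator multiplication.
    """
    product = np.ones(rasters[0].shape, dtype=float)
    for raster in rasters:
        product = product * raster
    return np.where(np.isnan(product), np.nan, 1.0)


elevation = np.array([[1.0, np.nan], [2.0, 3.0]])
precipitation = np.array([[np.nan, 1.0], [0.0, 5.0]])
template = template_from_rasters([elevation, precipitation])
print(template)
```

Note that a legitimate value of zero still counts as data here, since the template only tracks where NaN appears.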

Wednesday, October 19, 2016

Creating non-spatial and "not quite true" spatial figures in GIS

I recently was tasked with creating figures to show some variables collected along a trapping grid. The problem with these data was that the trapping locations were very close to one another, yet the trapping grids themselves were far apart. As a result, it was very nearly impossible to show meaningful differences at the scale of the entire study. In the figure on the right you can see the location of the trapping grids. Each grid had 64 stations arrayed in 16 columns and 4 rows. I've removed any geographic information that may suggest the location of this study for the sake of privacy.

The solution? What I call a "not quite true" spatial map. In the figure below we see that each bold box shows a trapping grid and each smaller box is a trapping station. The colors represent the intensity of some value. It may be the number of animals caught in the traps at the locations or some habitat variable having to do with plant cover or soil type. The reason why it is "not quite true" spatial is that the distances among trapping grids are much larger than they are in real life and the distances between individual traps aren't always exactly even. Nonetheless it shows spatial patterns in a succinct and compact form.

To make this map all I had to do was create relative row and column X and Y coordinates. That table was then imported into ArcMap as X and Y data. In this example there were a total of 36 rows and 48 columns. Although this workflow could have taken place using R or Python, I found that it was quite easy to accomplish in ArcMap.
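For anyone who would rather script the coordinate table, here is a rough Python sketch. It assumes the grids are packed edge to edge, 3 across and 9 down, which is one arrangement that gives 48 columns and 36 rows; the actual layout, the field names, and the function names are assumptions.

```python
import csv

STATION_COLS, STATION_ROWS = 16, 4  # stations per grid, as described above


def relative_coordinates(grids_across, grids_down):
    """Return one record per trapping station with relative X and Y values."""
    records = []
    for grid_row in range(grids_down):
        for grid_col in range(grids_across):
            grid_id = grid_row * grids_across + grid_col + 1
            for row in range(STATION_ROWS):
                for col in range(STATION_COLS):
                    records.append({
                        "GridID": grid_id,
                        "X": grid_col * STATION_COLS + col,
                        "Y": grid_row * STATION_ROWS + row,
                    })
    return records


def write_table(records, path):
    """Write the records to a CSV that can be added to ArcMap as X and Y data."""
    with open(path, "w", newline="") as handle:
        writer = csv.DictWriter(handle, fieldnames=["GridID", "X", "Y"])
        writer.writeheader()
        writer.writerows(records)


table = relative_coordinates(grids_across=3, grids_down=9)
print(len(table))
```

Adding a gap between grids would only take an extra offset on the X and Y terms.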

This is a nice reminder of how GIS can be a powerful tool for all sorts of visualizations, not just for maps. Using GIS we can very easily change color schemes using different kinds of classifications (e.g. natural breaks, quantiles, equal intervals), edit individual lines and polygons, and convert from raster to vector formats. Some of this stuff is tedious to do in other types of software.

We're probably all familiar with some examples of "not quite true" spatial maps. Subway maps are a prime example. They are designed to show relative space, but distance isn't always accurate in the true geographic sense. However, we can also take raster GIS outside of the realm of normal geographic space and actually use GIS for displaying things like time series or even data space. More on that later.

Labels: ArcGIS, cartography, choropleth, ecology, GRID, polygon, raster, sampling, vector

Wednesday, September 7, 2016

Nevada - last in the nation for LiDAR coverage

As Nevadans we're used to placing last or near last in lots of socioeconomic indicators. According to the USGS 3DEP program we may well be last in another category: LiDAR coverage.

LiDAR or laser altimetry is a valuable technology used for mapping all sorts of things ranging from building heights to flood zones to timber volumes. I've used LiDAR a lot in my own work, and I believe that it is moving beyond a new cool tool to a critical piece of data infrastructure. In fact, a lot of remote sensing products rely strongly on LiDAR. As a state we need to be thinking about investing in LiDAR data if we want to solve the 21st century problems that all of the other states are solving.

Labels: 3DEP, bare earth, DEM, hydrology, last place, LIDAR, Nevada, USGS

Thursday, August 25, 2016

Advertising two positions in our lab - M.S. in remote sensing of cheatgrass die-offs and Post Doc in Riparian Ecology

We are advertising two positions in our lab - one for an M.S. student and the other for a postdoctoral researcher. Read the descriptions HERE

Friday, August 12, 2016

Cheatgrass die-offs the movie

Our lab has been working on accurately mapping a phenomenon known as cheatgrass die-off. Although the exact mechanism responsible for a cheatgrass die-off is still being figured out, it is thought that a fungal pathogen combined with certain weather conditions and litter accumulation is responsible for these stand failure events. We've been using Landsat to map 35 years of cheatgrass die-off in the Winnemucca area of Nevada. We plan to publish our results in a forthcoming journal article, but in the meantime we have added a link to a movie file (AVI) showing cheatgrass die-off by year. Sit back and enjoy.

Downloads page

Thursday, August 11, 2016

Zonal versus focal statistics

One of the main purposes of a Geographic Information System is to extract information. We do this all

One possible solution to his problem was the use of focal statistics rather than zonal statistics for extracting information. This works especially well for buffers around points since the buffers will always be the same size. Focal statistics provide options for rectangle buffers or circular buffers. Focal statistics are nice because if new points are added then the rasters are already there. There is no need to re-run anything other than extracting the values to points. Focal statistics are sensitive to edge effects so be cautious with points located near the boundary of a raster.
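To make the focal approach concrete, here is a small NumPy sketch of a circular focal mean. It is an illustration rather than the ArcGIS implementation: the radius is in cells, the function names are hypothetical, and cells outside the raster are simply ignored, which shows why values near the boundary rest on fewer cells.

```python
import numpy as np


def circular_mask(radius):
    """Boolean window that is True within `radius` cells of the center."""
    offsets = np.arange(-radius, radius + 1)
    dy, dx = np.meshgrid(offsets, offsets, indexing="ij")
    return dx ** 2 + dy ** 2 <= radius ** 2


def focal_mean(raster, radius):
    """Mean of all cells within a circular neighborhood of every cell."""
    mask = circular_mask(radius)
    size = 2 * radius + 1
    # Pad with NaN so neighborhoods that hang off the edge use fewer cells.
    padded = np.pad(raster.astype(float), radius, constant_values=np.nan)
    result = np.empty(raster.shape, dtype=float)
    for row in range(raster.shape[0]):
        for col in range(raster.shape[1]):
            window = padded[row:row + size, col:col + size]
            result[row, col] = np.nanmean(window[mask])
    return result


raster = np.arange(25).reshape(5, 5)
focal = focal_mean(raster, radius=1)
# "Extracting values to points" is then just a lookup at each point's cell.
print(focal[2, 2], focal[0, 0])
```

Once the focal raster exists, a new point costs one lookup rather than a fresh zonal run.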

Zonal statistics, on the other hand, are preferable in situations with irregularly shaped zones (often polygons) like watersheds or administrative units like counties. Zonal statistics can be made to work with overlapping polygons with a little extra work. Usually this involves unioning the overlapping polygons, finding areas common to more than one point, and then doing some sort of area weighted average.
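The area-weighted step can be sketched in a few lines of Python. This assumes the union has already been run and that each resulting piece carries the IDs of the buffers it belongs to, its area, and its zonal mean; the data structure and names are hypothetical.

```python
def area_weighted_means(pieces):
    """Combine per-piece zonal means into one mean per original buffer.

    pieces : iterable of (buffer_ids, area, mean_value), one per union piece.
    A piece shared by several buffers contributes to each of them.
    """
    totals = {}
    for buffer_ids, area, mean_value in pieces:
        for buffer_id in buffer_ids:
            weighted_sum, total_area = totals.get(buffer_id, (0.0, 0.0))
            totals[buffer_id] = (weighted_sum + area * mean_value,
                                 total_area + area)
    return {buffer_id: weighted_sum / total_area
            for buffer_id, (weighted_sum, total_area) in totals.items()}


# Two buffers, A and B, sharing one overlap piece.
pieces = [
    (("A",), 60.0, 0.2),      # part of A only
    (("A", "B"), 40.0, 0.5),  # overlap of A and B
    (("B",), 60.0, 0.8),      # part of B only
]
means = area_weighted_means(pieces)
print(means)
```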

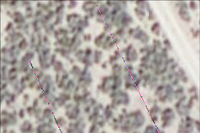

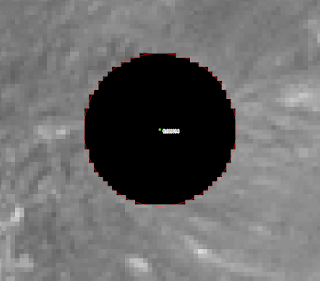

In the examples on the right we have a buffered point and underlying raster (top), a buffered point on a focal mean raster (middle), and a buffered point that has had zonal statistics run on it (bottom). Focal statistics yielded a value of 0.189641 and zonal statistics got us 0.189814. The difference is probably due to a single cell or a small handful of cells that were included in one buffer but not the other. I think that for many applications a discrepancy this small shouldn't matter.

Tuesday, June 14, 2016

64-bit TIFF images not supported in ArcGIS

Earlier this week I encountered an interesting phenomenon. Some output TIFF images in ArcMap kept displaying like the one below. I tried deleting pyramids, rebuilding pyramids, but to no avail. However, the images displayed perfectly well at < 1:250,000 map scale. Then I noticed that the image was 64-bit depth. It turns out that even though Esri supports 64-bit rasters for display not all functions work properly. I forwarded this to Esri support and it turns out that this is a limitation of the software not a bug. There are plenty of workarounds for this problem. Simply converting to 32-bit works. As does converting to a file geodatabase format. However, I think that having this out there as a known limitation in the blogosphere might do some good. Check out the link below for the list of supported raster formats in ArcGIS 10.3.
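If the raster is being processed outside of ArcGIS, the 32-bit workaround is easy to sanity-check first. The NumPy sketch below assumes the 64-bit raster holds double-precision floats and has already been read into an array; the function name and tolerance are hypothetical.

```python
import numpy as np


def to_float32(raster64, rtol=1e-6):
    """Cast a 64-bit float array to 32-bit, refusing if values change too much."""
    raster32 = raster64.astype(np.float32)
    # Compare the cast values back against the originals (NaN matches NaN).
    if not np.allclose(raster32, raster64, rtol=rtol, atol=0.0, equal_nan=True):
        raise ValueError("Values lose too much precision as 32-bit floats")
    return raster32


raster64 = np.array([[0.189641, 1234.5], [np.nan, -3.0]], dtype=np.float64)
raster32 = to_float32(raster64)
print(raster32.dtype)
```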

http://desktop.arcgis.com/en/arcmap/10.3/manage-data/raster-and-images/supported-raster-dataset-file-formats.htm

Monday, June 13, 2016

Handy tool for converting feature classes (shapefiles) to GPX format

I recently came across this very useful tool for converting feature classes in ArcGIS to GPX files:

https://www.arcgis.com/home/item.html?id=067d6ab392b24497b8466eb8447ea7eb

Thursday, June 9, 2016

Multiscale connectivity and graph theory highlight critical areas for conservation under climate change is out in print

Our paper "Multiscale connectivity and graph theory highlight critical areas for conservation under climate change" is now out in print in Ecological Applications.

Dilts, T., Weisberg, P., Leitner, P., Matocq, M. D., Inman, R. D., Nussear, K. E., & Esque, T. (2016). Multi-scale connectivity and graph theory highlight critical areas for conservation under climate change. Ecological Applications, 26(4): 1223–1237.

I discovered, however, that the link at the end of the article to the supplemental KMZ files is incorrect. They erroneously printed the link as:

http://onlinelibrary.wiley.com/doi/10.1890/15-0925.1/suppinfo

This is a dead link. Instead go to:

http://onlinelibrary.wiley.com/doi/10.1890/15-0925/full

or

https://www.researchgate.net/publication/286490878_Multi-scale_connectivity_and_graph_theory_highlight_critical_areas_for_conservation_under_climate_change

Wednesday, June 1, 2016

Impacts of climate change and renewable energy development on habitat of an endemic squirrel, Xerospermophilus mohavensis, in the Mojave Desert, USA accepted in Biological Conservation

Our paper "Impacts of climate change and renewable energy development on habitat of an endemic squirrel, Xerospermophilus mohavensis, in the Mojave Desert, USA" has been accepted in Biological Conservation! This is the third Mohave ground squirrel paper of ours that has been recently accepted. The other two are:

Inman, RD, TC Esque, KE Nussear, P Leitner, MD Matocq, PJ Weisberg, TE Dilts, and AG Vandergast. 2013. Is there room for all of us? Renewable energy and Xerospermophilus mohavensis. Endangered Species Research 20:1-20.

and

Dilts, T., P. Weisberg, P. Leitner, M. Matocq, R. Inman, K. Nussear, and T. Esque. In Review. Multi-scale connectivity and graph theory highlight critical areas for conservation under climate change. Ecological Applications.

This paper examines climate change effects on Mohave ground squirrel habitat, but also includes realistic dispersal scenarios to understand which portions of the range are most likely to be colonized.

Congratulations to Rich Inman on a job well done.

Friday, May 13, 2016

Paper is available online - Multi-scale connectivity and graph theory highlight critical areas for conservation under climate change

Our paper "Multi-scale connectivity and graph theory highlight critical areas for conservation under climate change" is now available online HERE .

In this paper we use a combination of circuit theory and graph theory to assess changes to Mohave ground squirrel habitat connectivity from proposed solar and wind energy development. Graph theory is used both to assess overall changes in habitat connectivity and to map out core areas and stepping stones that may be important for dispersal.

Current citation:

Dilts, T. E., Weisberg, P. J., Leitner, P., Matocq, M. D., Inman, R. D., Nussear, K. E. and Esque, T. C. (2016), Multi-scale connectivity and graph theory highlight critical areas for conservation under climate change. Ecological Applications. Accepted Author Manuscript. doi:10.1890/15-0925

Thursday, April 28, 2016

Improvements to Sampling Grid Tools

A neat thing happened this week. A while back I created a new tool called Create Regular Sampling Grid and posted it online HERE . The tool is designed to guide field workers through a systematic grid laid out around user-defined points. The tool is useful for validating coarse-resolution imagery, such as Landsat or MODIS, or for sampling systematically within polygons. Earlier this week I got a message from Duncan Hornby, a longtime ArcGIS programmer from the UK, with a slew of awesome suggestions for ways to improve this tool. His suggestions ended up dramatically improving the speed and user interface of the Create Regular Sampling Grid Tool, resulting in version 2. The lesson that I learned is that good things can happen with a second pair of eyes, and posting code online is a great idea. I also learned a fair bit about improving the user experience and anticipating and troubleshooting potential problems. Thanks Duncan.
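For readers who have not seen the tool, the basic idea of a systematic grid around a point can be sketched in plain Python. This is not the tool's code; the function name, the parameters, and the square layout centered on the point are assumptions for illustration.

```python
def regular_grid(center_x, center_y, spacing, points_per_side):
    """Return (x, y) sample locations on a square grid centered on one point."""
    half = (points_per_side - 1) / 2.0
    return [
        (center_x + (col - half) * spacing, center_y + (row - half) * spacing)
        for row in range(points_per_side)
        for col in range(points_per_side)
    ]


# A 3 x 3 grid with 30 m spacing, roughly one sample per Landsat cell.
samples = regular_grid(500.0, 1000.0, spacing=30.0, points_per_side=3)
print(samples)
```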

Friday, April 22, 2016

Consider Thiessen polygons as an alternative to heat maps

Recently a colleague asked me to make a heat map for her animal trapping results. I agreed, but then quickly realized that the task was more difficult than it first seemed. In this particular case the individual trapping stations were only 10 meters apart and the distance among trapping grids was 500 meters. It struck me as inappropriate to try to interpolate across such a long distance when the variability within a grid was so great. I wasn't really a fan of just displaying the points either. Somehow they just came across looking messy and imprecise. The solution that I came up with was Thiessen polygons. This is probably the simplest form of interpolation, yet it is extremely powerful visually. In the resulting maps you are still able to see the fine-scale detail of individual trapping locations yet still make out the broad-scale patterns. In this map I've left off the legend to maintain the anonymity for the person that I made the map for. I think that it pretty clearly illustrates the point that I'm trying to make.

Another nice feature of Thiessen polygons is that they are an exact interpolator. That means that the model reproduces the observed value at the location of each data point. This is not the case with every interpolator; smoothing methods such as trend surfaces, or kriging that models measurement error, do not necessarily honor the data values.
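Thiessen polygons are equivalent to assigning every location the value of its nearest data point, which is why they are exact at the data points themselves. A minimal NumPy sketch of that nearest-neighbor rule follows; the function name and the sample values are made up for illustration.

```python
import numpy as np


def thiessen_value(points_xy, values, query_xy):
    """Return the value of the data point nearest to the query location."""
    points_xy = np.asarray(points_xy, dtype=float)
    distances = np.hypot(points_xy[:, 0] - query_xy[0],
                         points_xy[:, 1] - query_xy[1])
    return values[int(np.argmin(distances))]


# Three trapping stations 10 m apart with made-up capture counts.
stations = [(0.0, 0.0), (10.0, 0.0), (20.0, 0.0)]
captures = [4, 0, 7]
print(thiessen_value(stations, captures, (10.0, 0.0)))  # at a station
print(thiessen_value(stations, captures, (17.0, 2.0)))  # between stations
```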

Lesson: if considering heat maps or interpolation look into Thiessen polygons first. It may be the simplest yet best tool for the job.

Friday, April 15, 2016

Random forest and ArcScan

Recently I've been helping some colleagues in my department convert some historical maps into contour lines. They are at the stage of using ArcScan to convert old scanned topographic maps into contour lines. Interestingly, ArcScan doesn't make use of multi-band image files, but rather single-band grayscale images. When trying to pull out contour lines as distinct from other types of lines this became problematic. Using a simple band thresholding method browns and grays tended to get confused.

The solution? I used the Marine Geospatial Ecology Tools to perform a random forest classification to separate brown lines from all other colored lines. The results were really impressive. Pretty much all of the gray lines were removed. See the picture below for an illustration of the result.

Original scanned topographic map with contour lines in brown and other lines in black/gray.

The result when using a simple band thresholding approach. Black lines represent cells that will be used in the vectorization process.

The result from the random forest classification plus a small amount of speckle removal using the Region Group tool in ArcGIS.

Thursday, April 7, 2016

Historical ecology of the San Joaquin River Delta

I was having a discussion with a colleague recently and got reminded of some of the historical ecology work that has taken place in California. Robin Grossinger's historical ecology group at the San Francisco Estuary Institute does excellent detective work. Their work results in maps of how landscapes have changed over the past one hundred years or more and is changing how land managers actively manage lands and species. Kudos to Robin Grossinger, Allison Whipple, Erin Beller and other historical ecologists at the San Francisco Estuary Institute for a job well done, for effecting positive change on land management practices and ecosystem restoration, and for elevating the role of historical ecology!

http://www.npr.org/2012/10/07/162393931/restore-california-delta-to-what-exactly

Wednesday, March 23, 2016

New versions of climatic water deficit tools and scripts

Miranda Redmond from our lab has created an R script for running PRISM climate data to generate climatic water deficit data for discrete sites. The functionality is similar to our existing spreadsheet version of the Climatic Water Deficit Toolbox for ArcGIS in that it runs on discrete sites rather than raster cells. You can view all three versions of the tools HERE as well as a whole variety of other tools. We anticipate that there will be improvements to all versions of the climatic water deficit tools in the near future so stay tuned!

Friday, March 18, 2016

An under-appreciated method for partitioning data into training and test

Perhaps one of the most under-appreciated tools in ArcGIS is Subset Features. There are numerous examples of how to generate random points, which can then be sorted and used to generate fields for subsetting your data, but why not just do it directly? The Subset Features tool in the Geostatistical Analyst toolbox does this. Another alternative to check out is the Marine Geospatial Ecology Tools (MGET). MGET has numerous tools for doing modeling and is a great resource for quickly running models and bringing the results back into ArcMap for display.
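For readers who want to see the idea outside of ArcGIS, here is a minimal sketch of a random training/test partition in plain Python. It is not the Subset Features tool itself, just the same kind of split; the function name `split_train_test`, the 70/30 fraction, and the list of point IDs are all assumptions made for illustration.

```python
import random


def split_train_test(records, train_fraction=0.7, seed=42):
    """Randomly partition records into (training, test) lists.

    Hypothetical helper for illustration; not part of ArcGIS or MGET.
    """
    if not 0.0 <= train_fraction <= 1.0:
        raise ValueError("train_fraction must be between 0 and 1")
    rng = random.Random(seed)      # seeded so the split is repeatable
    shuffled = list(records)       # copy so the caller's list is not reordered
    rng.shuffle(shuffled)
    n_train = round(len(shuffled) * train_fraction)
    return shuffled[:n_train], shuffled[n_train:]


# Assumed example data: 100 point IDs standing in for feature OBJECTIDs.
point_ids = list(range(1, 101))
training, test = split_train_test(point_ids, train_fraction=0.7)
```

Every record ends up in exactly one of the two lists, and fixing the seed means the same split can be regenerated later.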

Tuesday, March 1, 2016

ArcGIS Idea - side by side catalog windows to make moving files really easy

Esri should allow side by side catalog windows to allow for really easy file moving - http://ideas.arcgis.com/ideaView?id=087E0000000kBwrIAE

Wednesday, February 24, 2016

Joys and tribulations of the mosaic dataset

Recently I've been really enjoying some of the benefits that the new mosaic dataset file format in ArcGIS has to offer. For starters, I usually work with large imagery collections that are too large to mosaic into a single file. The ability to seamlessly and virtually mosaic imagery has been refreshing, and I've been satisfied with performance. I'm also enthralled with Google Earth Engine and other cloud-based processing options, but sometimes it is nice to be able to have the data on-hand and usable within desktop GIS.

For all of the advantages of mosaic datasets there are a handful of disadvantages that are not well advertised by Esri. We recently encountered the message "Distributing mosaic dataset operation across four parallel instances on specified host" followed by the dreaded 99999 error. It didn't take too long to figure out that the large raster tiles (>500 MB) couldn't be processed on a computer with 8 GB RAM. When trying it on a computer with 32 GB RAM the problem was miraculously resolved. Lesson learned: if you are going to be building mosaic datasets, do it on a computer with lots of RAM or else use many small tiles.

Our other discovery is the need for full path names. Drive letters are almost never a problem for regular geoprocessing, but when it comes to being able to view the mosaic dataset across different computers with different mapped drive letters it becomes imperative!
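One way to catch this before building a mosaic dataset is to scan the list of raster paths for mapped drive letters. The sketch below assumes that "full path names" means UNC-style paths such as `\\server\share\...`; the function name `uses_drive_letter` and the example paths are hypothetical.

```python
import ntpath  # Windows path rules, usable on any operating system


def uses_drive_letter(path):
    """Return True if a Windows path starts with a drive letter such as 'Z:'."""
    drive, _ = ntpath.splitdrive(path)
    return len(drive) == 2 and drive[0].isalpha() and drive[1] == ":"


# Hypothetical raster paths, for illustration only.
raster_paths = [
    r"Z:\imagery\tile_01.tif",
    r"\\server\share\imagery\tile_02.tif",
]
needs_fixing = [p for p in raster_paths if uses_drive_letter(p)]
```

Anything that lands in `needs_fixing` depends on a drive mapping that another computer may not share.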

Friday, February 12, 2016

New tool - Create Sampling Grid from Points for ArcGIS v. 1.0

With the advent of high-precision GPS, spatially-explicit sampling designs have taken on an increasing importance in ecology and natural resource management. Spatially-explicit sampling regimes are useful for understanding processes such as attraction and repulsion that can be described using point pattern processes. This tool also opens up the possibility of random sampling within a larger grid. For example, users may want to collect field data to scale up to Landsat or MODIS pixels. It may be infeasible to collect data for an entire pixel, so some random sampling of the pixel may be necessary. Similarly, there may be vegetation polygons or agricultural fields that the researcher wishes to sample in a random or a systematic manner. Finally, even if the researcher wishes to sample the entire grid, having the ability to load center points or corner points onto a GPS and navigate to them may expedite field sampling. The creation of this tool was inspired by the needs of an ongoing pygmy rabbit research project here in Nevada, Oregon, and Idaho.

This tool allows for the creation of polygons and centroids of polygons based on known points. The known points can be random locations, centroids of features of interest (e.g. polygons of agricultural fields or vegetation polygons), or regular gridded points across a landscape. This tool differs from existing tools, such as the Fishnet tools in ArcGIS, because it does not create a single grid for the entire landscape, but rather creates a local grid centered on each point in the input shapefile. It uses the following formula to achieve this:

(-1*(d/2) - 0.5) + i

where d is the dimensions parameter and i is the iteration number. The two images below illustrate a grid with an even number of dimensions (6 on the left) and one with an odd number of dimensions (7 on the right). Both sampling grids are centered on points provided by the user, but the one on the left has the original point (green) falling on a grid corner. The one on the right has the original point (provided by the user, in green) falling in the center of an individual grid tile. The maroon points were created using the 'Feature Vertices to Points' tool, a standard ArcGIS tool that requires an Advanced license.
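To make the even/odd behavior concrete, here is a small Python sketch of the formula. It rests on a few assumptions that the post does not spell out: that i runs from 1 to d, that the result is an offset measured in cell widths, and that the same offsets are applied in both x and y. The function names and the `cell_size` parameter are hypothetical, and this is not the tool's actual code.

```python
def grid_offsets(d):
    """Offsets of tile centers from the input point, in cell widths.

    Applies (-1*(d/2) - 0.5) + i for i = 1..d (the range of i is an assumption).
    """
    return [(-1 * (d / 2) - 0.5) + i for i in range(1, d + 1)]


def grid_centers(x, y, d, cell_size):
    """Tile-center coordinates for a d-by-d grid centered on (x, y).

    Assumes the same offsets apply along both axes.
    """
    offsets = grid_offsets(d)
    return [(x + ox * cell_size, y + oy * cell_size)
            for oy in offsets for ox in offsets]


even = grid_offsets(6)  # [-2.5, -1.5, -0.5, 0.5, 1.5, 2.5]
odd = grid_offsets(7)   # [-3.0, -2.0, -1.0, 0.0, 1.0, 2.0, 3.0]
```

With d = 6 no offset is zero, so the input point sits on a shared tile corner; with d = 7 the middle offset is zero, so the input point sits at the center of the middle tile.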
